MCP: Cornell Resume

A Model Context Protocol (MCP) server that automatically generates Cornell-style study notes and summaries from the conversation context, with RAG-based active recall question generation and Notion integration.

Features

The server processes text from the conversation context, generates contextual summaries, creates context-aware active recall questions, and automatically saves everything to your Notion database.

- Real-time Cornell-style note generation from the client chat conversation history

- Context-aware active recall question generation using vector similarity

- Semantic search integration with Pinecone for relevant note retrieval

- Automatic Notion database synchronization with proper block formatting

- OpenAI-powered text processing and question generation
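To illustrate the Notion output, a Cornell note (cue questions, main notes, and a bottom summary) can be rendered as Notion block objects. This is a minimal sketch, assuming a hypothetical `CornellNote` shape; the server's actual fields and layout may differ. The dictionaries follow the Notion API's `heading_2`, `bulleted_list_item`, and `paragraph` block format, and long text is split because Notion caps each rich-text object at 2,000 characters.

```python
from dataclasses import dataclass, field

# Notion caps the "content" of one rich-text object at 2,000 characters.
MAX_RICH_TEXT = 2000


@dataclass
class CornellNote:
    """Hypothetical shape of a generated Cornell note."""
    cues: list[str] = field(default_factory=list)   # active recall questions
    notes: list[str] = field(default_factory=list)  # main note points
    summary: str = ""                               # bottom summary section


def rich_text(content: str) -> list[dict]:
    """Split content into rich-text objects that respect the length cap."""
    return [
        {"type": "text", "text": {"content": content[i:i + MAX_RICH_TEXT]}}
        for i in range(0, len(content), MAX_RICH_TEXT)
    ]


def block(block_type: str, content: str) -> dict:
    """Build one Notion block object, e.g. a heading_2 or a paragraph."""
    return {"object": "block", "type": block_type, block_type: {"rich_text": rich_text(content)}}


def to_notion_blocks(note: CornellNote) -> list[dict]:
    """Render cues, notes, and summary as headed Notion sections."""
    blocks = [block("heading_2", "Cues")]
    blocks += [block("bulleted_list_item", cue) for cue in note.cues]
    blocks.append(block("heading_2", "Notes"))
    blocks += [block("bulleted_list_item", point) for point in note.notes]
    blocks.append(block("heading_2", "Summary"))
    blocks.append(block("paragraph", note.summary))
    return blocks
```

The resulting list can be passed as the `children` of a page-creation request; Notion accepts at most 100 elements in such an array, so very long notes would need to be appended in batches.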

Setup Guide

Requirements

- Python 3.13+

- uv

- OpenAI account

- Pinecone account

- Notion account

Installation

- Clone the repository

```bash
git clone
cd mcp-cornell-resume
```

- Install dependencies

```bash
# Create a virtual environment (uv creates it in .venv by default)
uv venv
source .venv/bin/activate  # On Windows: .venv\Scripts\activate

# Install the required packages
uv pip sync docs/requirements.txt
```

- Environment Configuration: Create a `.env` file in the project root:

```
OPENAI_API_KEY=your_openai_api_key
PINECONE_API_KEY=your_pinecone_api_key
PINECONE_INDEX_NAME=cornell-notes
NOTION_API_KEY=your_notion_api_key
NOTION_DATABASE_ID=your_database_id
```

- Set up Notion

  - Create a Notion integration on the Notion developers page and set its capabilities. At minimum, select "Read content" and "Update content"; creating new pages also requires "Insert content".

  - Copy the integration's API key into `NOTION_API_KEY`.

  - Share the target page (or database) with your integration.

  - Get the database ID: it appears in the page's URL. Set it as `NOTION_DATABASE_ID`.

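- Configure Pinecone Index: Create a Pinecone index with:

  - Dimension: 1536 (the output size of OpenAI's `text-embedding-3-small` and `text-embedding-ada-002` models; it must match the embedding model the server uses)

  - Index name matching `PINECONE_INDEX_NAME` in your `.env` configuration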
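The configuration steps above can be checked with a small helper module. This is a hypothetical sketch, not part of the project. `missing_env_vars` fails fast on unset variables and assumes the `.env` values are already loaded into the process environment (for example by `python-dotenv`, which this README does not confirm). `database_id_from_url` assumes the common URL layout in which the 32-character hexadecimal ID ends the last path segment. `ensure_index` assumes the `pinecone` Python SDK (v3 or later) and a serverless index; the metric, cloud, and region are guesses.

```python
import os
import re
from collections.abc import Mapping
from urllib.parse import urlparse

# Variables from the .env example in the setup steps.
REQUIRED_VARS = (
    "OPENAI_API_KEY",
    "PINECONE_API_KEY",
    "PINECONE_INDEX_NAME",
    "NOTION_API_KEY",
    "NOTION_DATABASE_ID",
)

# A Notion ID is 32 hex characters (sometimes written as a dashed UUID).
_NOTION_ID = re.compile(r"[0-9a-f]{32}$", re.IGNORECASE)


def missing_env_vars(env: Mapping[str, str] = os.environ) -> list[str]:
    """Return the required variables that are unset or blank."""
    return [name for name in REQUIRED_VARS if not env.get(name, "").strip()]


def database_id_from_url(url: str) -> str:
    """Extract the ID that ends the last path segment of a Notion URL.

    The ?v=... query (the view ID) is ignored because only the path is read.
    """
    last_segment = urlparse(url).path.rstrip("/").split("/")[-1]
    match = _NOTION_ID.search(last_segment.replace("-", ""))
    if match is None:
        raise ValueError(f"no Notion ID found in {url!r}")
    return match.group(0).lower()


def ensure_index(api_key: str, index_name: str, cloud: str = "aws", region: str = "us-east-1") -> None:
    """Create a 1536-dimension Pinecone index if it does not exist yet."""
    # Lazy import so the other helpers work without the Pinecone SDK installed.
    from pinecone import Pinecone, ServerlessSpec

    pc = Pinecone(api_key=api_key)
    if index_name not in pc.list_indexes().names():
        pc.create_index(
            name=index_name,
            dimension=1536,
            metric="cosine",  # assumption: the README does not name a metric
            spec=ServerlessSpec(cloud=cloud, region=region),
        )
```

For example, call `missing_env_vars()` at startup and abort if it returns anything, then run `ensure_index(os.environ["PINECONE_API_KEY"], os.environ["PINECONE_INDEX_NAME"])` once.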

Usage

Using with Claude Desktop or other MCP-compatible applications

Add the following to your MCP configuration JSON file. For Claude Desktop on macOS, you can open the file in VS Code with:

```bash
code ~/Library/Application\ Support/Claude/claude_desktop_config.json
```

```json
{
  "mcpServers": {
    "resume_to_notion": {
      "command": "/Users/<USER>/.local/bin/uv",
      "args": [
        "--directory",
        "/<PATH_TO_PROJECT>/mcp-cornell-resume",
        "run",
        "main.py"
      ]
    }
  }
}
```

Replace `<USER>` and `<PATH_TO_PROJECT>` with your own values.
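If you prefer not to edit the paths by hand, a short script can produce the same entry with absolute paths. This is a sketch under assumptions: `uv` is on your `PATH` (located with `shutil.which`) and the script runs from the project root; `build_mcp_entry` and `local_entry_json` are hypothetical helpers. Merge the output into your existing `mcpServers` object rather than overwriting the file.

```python
import json
import shutil
from pathlib import Path


def build_mcp_entry(uv_path: str, project_dir: str) -> dict:
    """Return the mcpServers entry that runs main.py through uv."""
    return {
        "mcpServers": {
            "resume_to_notion": {
                "command": uv_path,
                "args": ["--directory", project_dir, "run", "main.py"],
            }
        }
    }


def local_entry_json() -> str:
    """Fill in this machine's uv path and the current directory, as JSON text."""
    uv_path = shutil.which("uv")
    if uv_path is None:
        raise FileNotFoundError("uv was not found on PATH")
    return json.dumps(build_mcp_entry(uv_path, str(Path.cwd().resolve())), indent=2)
```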

Available Tools

save_resume_to_notion

Summarize the full ongoing chat conversation and send the summary as `text`.

Parameters:

- `text` (string): a summary of the conversation in the client chat window.

Returns:

- `notion_page_id`: the ID of the created Notion page.

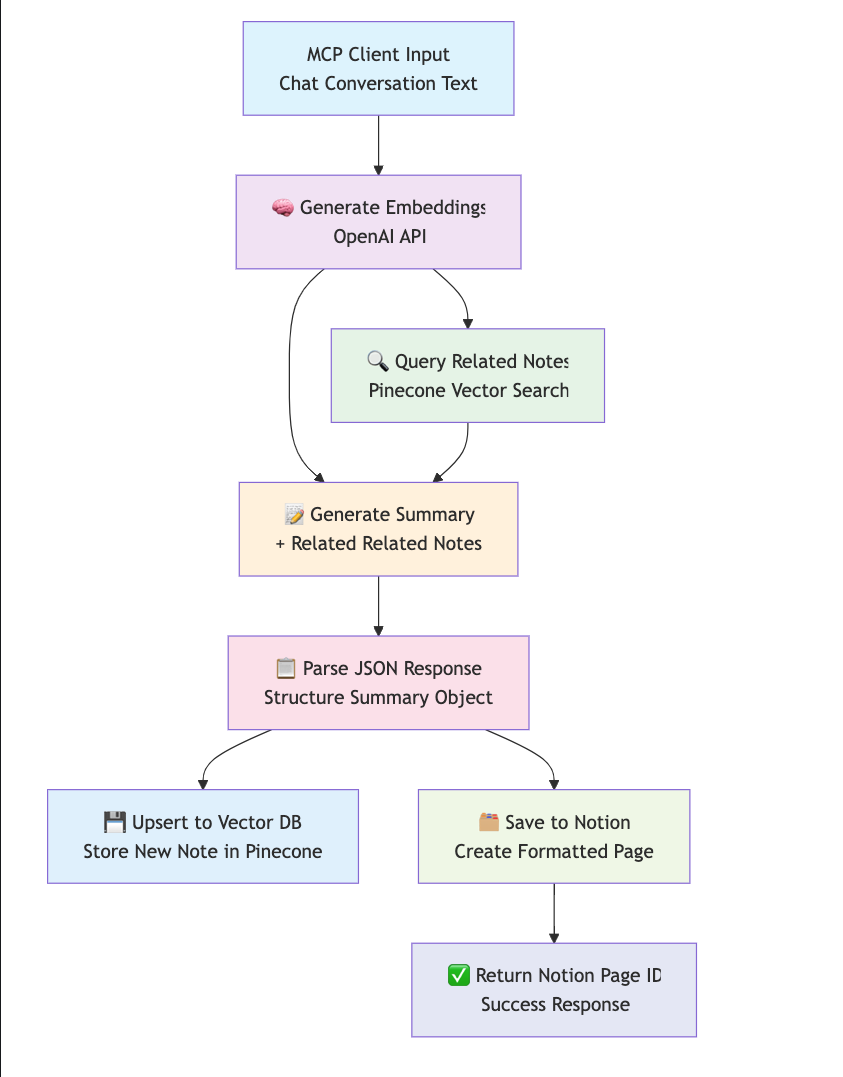
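A tool with this signature could be declared with FastMCP roughly as follows. This is a sketch, not the project's `main.py`: it assumes the `fastmcp` package (the one behind the `fastmcp dev` command), and the `MAX_TEXT_CHARS` limit and the `pipeline` callable are hypothetical.

```python
from collections.abc import Callable

# Hypothetical upper bound on the summary length accepted by the tool.
MAX_TEXT_CHARS = 50_000


def validate_text(text: str) -> str:
    """Reject empty or oversized input and trim surrounding whitespace."""
    cleaned = text.strip()
    if not cleaned:
        raise ValueError("text must not be empty")
    if len(cleaned) > MAX_TEXT_CHARS:
        raise ValueError(f"text exceeds {MAX_TEXT_CHARS} characters")
    return cleaned


def build_server(pipeline: Callable[[str], str]):
    """Register save_resume_to_notion on a FastMCP server."""
    from fastmcp import FastMCP  # imported lazily; requires the fastmcp package

    mcp = FastMCP("resume_to_notion")

    @mcp.tool()
    def save_resume_to_notion(text: str) -> str:
        """Summarize the full ongoing chat conversation and send it as 'text'."""
        # The pipeline is expected to return the ID of the new Notion page.
        return pipeline(validate_text(text))

    return mcp
```

Calling `build_server(my_pipeline).run()` would then serve the tool over stdio, FastMCP's default transport.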

How It Works

Process Flow

- Input Processing: The MCP client sends the chat conversation text to the `save_resume_to_notion` tool

- Embedding Generation: OpenAI creates vector embeddings from the input text content

- Context Retrieval: Pinecone searches for semantically similar existing notes using the embeddings

- Cornell Summary Generation: OpenAI generates a structured Cornell-style summary using:

- Original conversation content

- Related notes from Pinecone for context awareness

- Vector Storage: The new note is stored in Pinecone with its embeddings for future context retrieval

- Notion Integration: The formatted Cornell summary is saved to your Notion database

- Response: Returns the Notion page ID to the client
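The steps above can be sketched as a single function whose external services are injected as callables, which keeps the step order explicit and lets it run without network access. This is a hypothetical sketch: `Services`, `save_resume`, and the callable signatures stand in for the OpenAI, Pinecone, and Notion calls and are not the project's actual code; using `uuid4` for the vector ID is also an assumption.

```python
import uuid
from collections.abc import Callable
from dataclasses import dataclass


@dataclass
class Services:
    """Hypothetical adapters around OpenAI, Pinecone, and Notion."""
    embed: Callable[[str], list[float]]              # OpenAI embeddings
    search: Callable[[list[float], int], list[str]]  # Pinecone query -> related notes
    summarize: Callable[[str, list[str]], str]       # OpenAI Cornell summary
    upsert: Callable[[str, list[float], str], None]  # Pinecone upsert(id, vector, note)
    create_page: Callable[[str], str]                # Notion page creation -> page ID


def save_resume(text: str, svc: Services, top_k: int = 3) -> str:
    """Run the process flow and return the Notion page ID."""
    vector = svc.embed(text)                        # Embedding generation
    related = svc.search(vector, top_k)             # Context retrieval
    summary = svc.summarize(text, related)          # Cornell summary generation
    svc.upsert(str(uuid.uuid4()), vector, summary)  # Vector storage for future retrieval
    return svc.create_page(summary)                 # Notion integration, then response
```

In a test you can pass in-memory fakes for each callable; in production each field would wrap the corresponding SDK call.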

Development

You can use the MCP Inspector to try out the server:

```bash
fastmcp dev main.py
```
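Besides the Inspector, the tool can be called from a script. This sketch assumes fastmcp 2.x, whose `Client` accepts a path to a server script and launches it over stdio with the current Python interpreter, so run it from the activated virtual environment. `call_save_resume` and `run_once` are hypothetical names, and a real call performs OpenAI, Pinecone, and Notion requests with the keys in your `.env`.

```python
import asyncio


async def call_save_resume(text: str, server_script: str = "main.py"):
    """Launch the server over stdio and call save_resume_to_notion once."""
    from fastmcp import Client  # assumption: fastmcp 2.x is installed

    async with Client(server_script) as client:
        # This performs real OpenAI, Pinecone, and Notion calls.
        return await client.call_tool("save_resume_to_notion", {"text": text})


def run_once(text: str):
    """Synchronous wrapper for use from a plain script."""
    return asyncio.run(call_save_resume(text))
```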

Limitations and Future Improvements

- LLM Context Window Limitations: The LLM behind the MCP client has a finite context window, and its size depends on the model.

- If a chat session exceeds that window, a summary built only from the context still available can lose important information.

- Latency in Multi-Integration Workflows: Each integration (OpenAI, Pinecone, Notion) adds network latency, so a single save can be slow.

- Notion and Pinecone Sync Complexity: notes are written to both services separately, so the two stores can drift out of sync (for example, if one write fails).

- Create a tag-based RAG feature for the notes.

Security Considerations

- API Key Management: Store all API keys securely in `.env`

- Input Validation: All inputs are sanitized and validated

- Error Handling: Sensitive information is never exposed in errors

- Access Control: Notion integration respects workspace permissions

- Data Privacy: No chat content is permanently stored without consent
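One way to keep keys out of error messages is to scrub configured secret values before a message is returned to the client. A minimal sketch, assuming the keys live in the environment variables from the `.env` example; `redact` and `safe_error` are hypothetical helpers, and this README does not say how the server actually handles errors.

```python
import os
from collections.abc import Iterable, Mapping

# Variables whose values must never appear in client-facing messages.
SECRET_VARS = ("OPENAI_API_KEY", "PINECONE_API_KEY", "NOTION_API_KEY")


def redact(message: str, env: Mapping[str, str] = os.environ,
           names: Iterable[str] = SECRET_VARS) -> str:
    """Replace every configured, non-empty secret value found in message."""
    for name in names:
        secret = env.get(name)
        if secret:
            message = message.replace(secret, "[REDACTED]")
    return message


def safe_error(exc: Exception) -> str:
    """Format an exception for the MCP client without leaking secrets."""
    return redact(f"{type(exc).__name__}: {exc}")
```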

License

MIT License
